[Preview] v1.81.12 - Guardrail Policy Templates & Action Builder

Deploy this version

Docker

    docker run \
      -e STORE_MODEL_IN_DB=True \
      -p 4000:4000 \
      ghcr.io/berriai/litellm:main-v1.81.12.rc.1

Pip

    pip install litellm==1.81.12.rc1

Key Highlights

- Policy Templates - Pre-configured guardrail policy templates for common safety and compliance use-cases (including NSFW, toxic content, and child safety)

- Guardrail Action Builder - Build and customize guardrail policy flows with the new action-builder UI and conditional execution support

- MCP OAuth2 M2M + Tracing - Add machine-to-machine OAuth2 support for MCP servers and OpenTelemetry tracing for MCP calls through AI Gateway

- Responses API shell tool & context_management support - Server-side context management (compaction) and Shell tool support for the OpenAI Responses API

- Access Groups - Create access groups to manage model, MCP server, and agent access across teams and keys

- 50+ New Bedrock Regional Model Entries - DeepSeek V3.2, MiniMax M2.1, Kimi K2.5, Qwen3 Coder Next, and NVIDIA Nemotron Nano across multiple regions

- Add Semgrep & fix OOMs - Static analysis rules and out-of-memory fixes - PR #20912

Add Semgrep & fix OOMs

This release fixes out-of-memory (OOM) risks from unbounded asyncio.Queue() usage. Log queues (e.g. GCS bucket) and DB spend-update queues were previously unbounded and could grow without limit under load. They now use a configurable max size (LITELLM_ASYNCIO_QUEUE_MAXSIZE, default 1000); when full, queues flush immediately to make room instead of growing memory. A Semgrep rule (.semgrep/rules/python/unbounded-memory.yml) was added to flag similar unbounded-memory patterns in future code. PR #20912
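The flush-when-full behavior described above can be sketched roughly as follows. This is a minimal illustration, not the code from PR #20912: the `BoundedFlushQueue` class and its methods are hypothetical, and only the environment variable name and its default of 1000 come from these notes.

```python
import asyncio
import os

# Taken from the release notes: env var name and default. Everything else is illustrative.
MAX_QUEUE_SIZE = int(os.getenv("LITELLM_ASYNCIO_QUEUE_MAXSIZE", "1000"))


class BoundedFlushQueue:
    """Hypothetical helper: a bounded queue that flushes when full instead of growing."""

    def __init__(self, maxsize=MAX_QUEUE_SIZE):
        self._queue = asyncio.Queue(maxsize=maxsize)
        self.flushed_batches = []  # stand-in for a real sink (GCS upload, DB write)

    async def flush(self):
        # Drain everything currently queued and hand it off as one batch.
        batch = []
        while not self._queue.empty():
            batch.append(self._queue.get_nowait())
        if batch:
            self.flushed_batches.append(batch)

    async def add(self, item):
        # When the queue is full, flush first to make room rather than exceed maxsize.
        if self._queue.full():
            await self.flush()
        self._queue.put_nowait(item)

    def qsize(self):
        return self._queue.qsize()


async def demo():
    q = BoundedFlushQueue(maxsize=3)
    for i in range(7):
        await q.add({"request_id": i})
    return q


queue = asyncio.run(demo())
```

With a max size of 3 and seven items, the queue flushes twice and never holds more than three items in memory at once.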

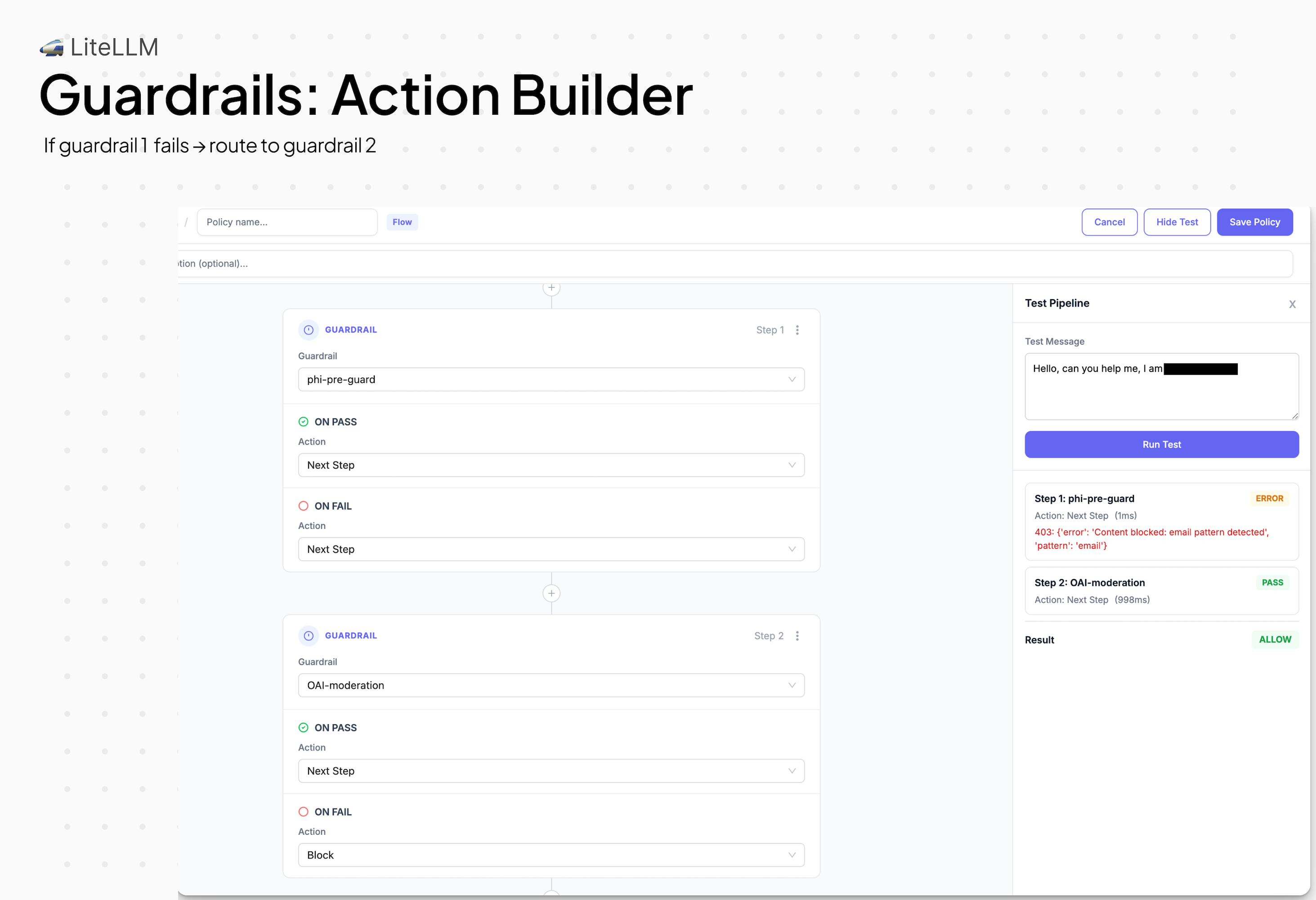

Guardrail Action Builder

This release adds a visual action builder for guardrail policies with conditional execution support. You can now chain guardrails into multi-step pipelines — if a simple guardrail fails, route to an advanced one instead of immediately blocking. Each step has configurable ON PASS and ON FAIL actions (Next Step, Block, or Allow), and you can test the full pipeline with a sample message before saving.
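As a rough mental model of the ON PASS / ON FAIL routing, the sketch below walks a list of steps and stops at the first terminal action. It is an assumption-laden illustration: `Action`, `Step`, `run_pipeline`, and both check functions are hypothetical names, not LiteLLM APIs, and the choice to allow a request when the steps run out is also an assumption.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List


class Action(Enum):
    NEXT_STEP = "next_step"
    BLOCK = "block"
    ALLOW = "allow"


@dataclass
class Step:
    name: str
    check: Callable[[str], bool]  # returns True when the message passes the guardrail
    on_pass: Action
    on_fail: Action


def run_pipeline(steps: List[Step], message: str) -> Action:
    """Walk the steps in order and return the first terminal action (BLOCK or ALLOW)."""
    for step in steps:
        action = step.on_pass if step.check(message) else step.on_fail
        if action is not Action.NEXT_STEP:
            return action
    # Assumption: a pipeline that runs out of steps allows the request.
    return Action.ALLOW


# Hypothetical checks: a cheap keyword filter, then a stricter stand-in for an advanced guardrail.
def simple_check(message: str) -> bool:
    return "attack" not in message.lower()


def advanced_check(message: str) -> bool:
    return "how to attack" not in message.lower()


pipeline = [
    Step("simple-keyword", simple_check, on_pass=Action.ALLOW, on_fail=Action.NEXT_STEP),
    Step("advanced-check", advanced_check, on_pass=Action.ALLOW, on_fail=Action.BLOCK),
]
```

Here a message that trips the simple filter is not blocked outright. It is escalated to the second step, which makes the final decision.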

Access Groups

Access Groups simplify defining resource access across your organization. One group can grant access to models, MCP servers, and agents—simply attach it to a key or team. Create groups in the Admin UI, define which resources each group includes, then assign the group when creating keys or teams. Updates to a group apply automatically to all attached keys and teams.
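To illustrate why group updates reach every attached key and team automatically, the sketch below resolves permissions through the group at lookup time rather than copying them onto each key. The `AccessGroup` class, the `GROUPS` registry, the group and resource names, and `allowed_models` are all hypothetical. Only the idea of a key carrying a list of access group IDs is taken from the `access_group_ids` column listed under Database Changes.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class AccessGroup:
    models: Set[str] = field(default_factory=set)
    mcp_servers: Set[str] = field(default_factory=set)
    agents: Set[str] = field(default_factory=set)


# Hypothetical in-memory registry keyed by group ID.
GROUPS: Dict[str, AccessGroup] = {
    "engineering": AccessGroup(
        models={"deepseek.v3.2"},
        mcp_servers={"github_mcp"},
        agents={"code_review_agent"},
    ),
}


def allowed_models(access_group_ids: List[str]) -> Set[str]:
    """Union the models of every group attached to a key or team."""
    allowed: Set[str] = set()
    for group_id in access_group_ids:
        group = GROUPS.get(group_id)
        if group is not None:
            allowed |= group.models
    return allowed


# A key only stores group IDs, so it never needs to be rewritten when a group changes.
key_access_group_ids = ["engineering"]
before = allowed_models(key_access_group_ids)

GROUPS["engineering"].models.add("qwen.qwen3-coder-next")
after = allowed_models(key_access_group_ids)
```

Because the key holds a reference to the group instead of a copy of its contents, adding a model to the group changes what the key can access on the next lookup.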

New Providers and Endpoints

New Providers (2 new providers)

| Provider | Supported LiteLLM Endpoints | Description |
|---|---|---|
| Scaleway | /chat/completions | Scaleway Generative APIs for chat completions |
| Sarvam AI | /chat/completions, /audio/transcriptions, /audio/speech | Sarvam AI STT and TTS support for Indian languages |

New Models / Updated Models

New Model Support (19 highlighted models)

| Provider | Model | Context Window | Input ($/1M tokens) | Output ($/1M tokens) |
|---|---|---|---|---|
| AWS Bedrock | deepseek.v3.2 | 164K | $0.62 | $1.85 |
| AWS Bedrock | minimax.minimax-m2.1 | 196K | $0.30 | $1.20 |
| AWS Bedrock | moonshotai.kimi-k2.5 | 262K | $0.60 | $3.00 |
| AWS Bedrock | moonshotai.kimi-k2-thinking | 262K | $0.73 | $3.03 |
| AWS Bedrock | qwen.qwen3-coder-next | 262K | $0.50 | $1.20 |
| AWS Bedrock | nvidia.nemotron-nano-3-30b | 262K | $0.06 | $0.24 |
| Azure AI | azure_ai/kimi-k2.5 | 262K | $0.60 | $3.00 |
| Vertex AI | vertex_ai/zai-org/glm-5-maas | 200K | $1.00 | $3.20 |
| MiniMax | minimax/MiniMax-M2.5 | 1M | $0.30 | $1.20 |
| MiniMax | minimax/MiniMax-M2.5-lightning | 1M | $0.30 | $2.40 |
| Dashscope | dashscope/qwen3-max | 258K | Tiered pricing | Tiered pricing |
| Perplexity | perplexity/preset/pro-search | - | Per-request | Per-request |
| Perplexity | perplexity/openai/gpt-4o | - | Per-request | Per-request |
| Perplexity | perplexity/openai/gpt-5.2 | - | Per-request | Per-request |
| Vercel AI Gateway | vercel_ai_gateway/anthropic/claude-opus-4.6 | 200K | $5.00 | $25.00 |
| Vercel AI Gateway | vercel_ai_gateway/anthropic/claude-sonnet-4 | 200K | $3.00 | $15.00 |
| Vercel AI Gateway | vercel_ai_gateway/anthropic/claude-haiku-4.5 | 200K | $1.00 | $5.00 |
| Sarvam AI | sarvam/sarvam-m | 8K | Free tier | Free tier |
| Anthropic | fast/claude-opus-4-6 | 1M | $30.00 | $150.00 |

Note: AWS Bedrock models are available across multiple regions (us-east-1, us-east-2, us-west-2, eu-central-1, eu-north-1, ap-northeast-1, ap-south-1, ap-southeast-3, sa-east-1). 54 regional model entries were added in total.
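As a worked example of reading the per-1M-token prices in the table, the helper below estimates the cost of a single request. `request_cost` is a hypothetical function written for this note, not LiteLLM's cost calculator, and it ignores tiered, cached, or per-request pricing.

```python
def request_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_1m: float,
    output_price_per_1m: float,
) -> float:
    """Estimate request cost in USD from per-1M-token prices."""
    input_cost = (input_tokens / 1_000_000) * input_price_per_1m
    output_cost = (output_tokens / 1_000_000) * output_price_per_1m
    return input_cost + output_cost


# deepseek.v3.2 on AWS Bedrock, per the table: $0.62 input, $1.85 output per 1M tokens.
cost = request_cost(
    input_tokens=200_000,
    output_tokens=50_000,
    input_price_per_1m=0.62,
    output_price_per_1m=1.85,
)
```

For 200K input tokens and 50K output tokens this works out to 0.2 × $0.62 + 0.05 × $1.85, or roughly $0.2165.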

Features

- Enable non-tool structured outputs on Claude Opus 4.5 and 4.6 using output_format param - PR #20548

- Add support for anthropic_messages call type in prompt caching - PR #19233

- Managing Anthropic Beta Headers with remote URL fetching - PR #20935, PR #21110

- Remove x-anthropic-billing block - PR #20951

- Use Authorization Bearer for OAuth tokens instead of x-api-key - PR #21039

- Filter unsupported JSON schema constraints for structured outputs - PR #20813

- New Claude Opus 4.6 features for /v1/messages - PR #20733

- Fix reasoning_effort=None and "none" should return None for Opus 4.6 - PR #20800

- Perplexity Research API support with preset search - PR #20860

- Add MiniMax-M2.5 and MiniMax-M2.5-lightning models - PR #21054

- Add dashscope/qwen3-max model with tiered pricing - PR #20919

- Add new Vercel AI Anthropic models - PR #20745

- Sync DeepSeek model metadata and add bare-name fallback - PR #20938

General

Bug Fixes

- Preserve content_policy_violation error details from Azure OpenAI - PR #20883

LLM API Endpoints

Features

- Add target_model_names for vector store endpoints - PR #21089

General

Bugs

- General

- Stop leaking Python tracebacks in streaming SSE error responses - PR #20850

- Fix video list pagination cursors not encoded with provider metadata - PR #20710

- Handle metadata=None in SDK path retry/error logic - PR #20873

- Fix Spend logs pickle error with Pydantic models and redaction - PR #20685

- Remove duplicate PerplexityResponsesConfig from LLM_CONFIG_NAMES - PR #21105

Management Endpoints / UI

Features

- Access Groups
- Policies
- SSO / Auth
- Spend Logs
- UI Improvements
  - Navbar: Option to hide Usage Popup - PR #20910
  - Model Page: Improve Credentials Messaging - PR #21076
  - Fallbacks: Default configurable to 10 models - PR #21144
  - Fallback display with arrows and card structure - PR #20922
  - Team Info: Migrate to AntD Tabs + Table - PR #20785
  - AntD refactoring and 0 cost models fix - PR #20687
  - Zscaler AI Guard UI - PR #21077
  - Include Config Defined Pass Through Endpoints - PR #20898
  - Rename "HTTP" to "Streamable HTTP (Recommended)" in MCP server page - PR #21000
  - MCP server discovery UI - PR #21079
- Virtual Keys

Bugs

- Logs: Fix Input and Output Copying - PR #20657

- Teams: Fix Available Teams - PR #20682

- Spend Logs: Reset Filters Resets Custom Date Range - PR #21149

- Usage: Request Chart stack variant fix - PR #20894

- Add Auto Router: Description Text Input Focus - PR #21004

- Guardrail Edit: LiteLLM Content Filter Categories - PR #21002

- Add null guard for models in API keys table - PR #20655

- Show error details instead of 'Data Not Available' for failed requests - PR #20656

- Fix Spend Management Tests - PR #21088

- Fix JWT email domain validation error message - PR #21212

AI Integrations

Logging

- Fix JSON serialization error for non-serializable objects - PR #20668

- Sanitize label values to prevent metric scrape failures - PR #20600

- Prevent empty proxy request spans from being sent to Langfuse - PR #19935

- Auto-infer otlp_http exporter when endpoint is configured - PR #20438

- Update CBF field mappings per LIT-1907 - PR #20906

General

- Allow MAX_CALLBACKS override via env var - PR #20781

- Add standard_logging_payload_excluded_fields config option - PR #20831

- Enable verbose_logger when LITELLM_LOG=DEBUG - PR #20496

- Guard against None litellm_metadata in batch logging path - PR #20832

- Propagate model-level tags from config to SpendLogs - PR #20769

Guardrails

- Policy Templates

- Pipeline Execution

- Add team policy mapping for ZGuard - PR #20608

General

- Add logging to all unified guardrails + link to custom code guardrail templates - PR #20900

- Forward request headers + litellm_version to generic guardrails - PR #20729

- Empty guardrails/policies arrays should not trigger enterprise license check - PR #20567

- Fix OpenAI moderation guardrails - PR #20718

- Fix /v2/guardrails/list returning sensitive values - PR #20796

- Fix guardrail status error - PR #20972

- Reuse get_instance_fn in initialize_custom_guardrail - PR #20917

Spend Tracking, Budgets and Rate Limiting

- Prevent shared backend model key from being polluted by per-deployment custom pricing - PR #20679

- Avoid in-place mutation in SpendUpdateQueue aggregation - PR #20876

MCP Gateway (12 updates)

- MCP M2M OAuth2 Support - Add support for machine-to-machine OAuth2 for MCP servers - PR #20788

- MCP Server Discovery UI - Browse and discover available MCP servers from the UI - PR #21079

- MCP Tracing - Add OpenTelemetry tracing for MCP calls running through AI Gateway - PR #21018

- MCP OAuth2 Debug Headers - Client-side debug headers for OAuth2 troubleshooting - PR #21151

- Fix MCP "Session not found" errors - Resolve session persistence issues - PR #21040

- Fix MCP OAuth2 root endpoints returning "MCP server not found" - PR #20784

- Fix MCP OAuth2 query param merging when authorization_url already contains params - PR #20968

- Fix MCP SCOPES on Atlassian issue - PR #21150

- Fix MCP StreamableHTTP backend - Use anyio.fail_after instead of asyncio.wait_for - PR #20891

- Inject NPM_CONFIG_CACHE into STDIO MCP subprocess env - PR #21069

- Block spaces and hyphens in MCP server names and aliases - PR #21074

Performance / Loadbalancing / Reliability improvements (8 improvements)

- Remove orphan entries from queue - Fix memory leak in scheduler queue - PR #20866

- Remove repeated provider parsing in budget limiter hot path - PR #21043

- Use current retry exception for retry backoff instead of stale exception - PR #20725

- Add Semgrep & fix OOMs - Static analysis rules and out-of-memory fixes - PR #20912

- Add Pyroscope for continuous profiling and observability - PR #21167

- Respect ssl_verify with shared aiohttp sessions - PR #20349

- Fix shared health check serialization - PR #21119

- Change model mismatch logs from WARNING to DEBUG - PR #20994

Database Changes

Schema Updates

| Table | Change Type | Description | PR | Migration |
|---|---|---|---|---|
| LiteLLM_VerificationToken | New Indexes | Added indexes on user_id+team_id, team_id, and budget_reset_at+expires | PR #20736 | Migration |
| LiteLLM_PolicyAttachmentTable | New Column | Added tags text array for policy-to-tag connections | PR #21061 | Migration |
| LiteLLM_AccessGroupTable | New Table | Access groups for managing model, MCP server, and agent access | PR #21022 | Migration |
| LiteLLM_AccessGroupTable | Column Change | Renamed access_model_ids to access_model_names | PR #21166 | Migration |
| LiteLLM_ManagedVectorStoreTable | New Table | Managed vector store tracking with model mappings | - | Migration |
| LiteLLM_TeamTable, LiteLLM_VerificationToken | New Column | Added access_group_ids text array | PR #21022 | Migration |
| LiteLLM_GuardrailsTable | New Column | Added team_id text column | - | Migration |

Documentation Updates (14 updates)

- LiteLLM Observatory section added to v1.81.9 release notes - PR #20675

- Callback registration optimization added to release notes - PR #20681

- Middleware performance blog post - PR #20677

- UI Team Soft Budget documentation - PR #20669

- UI Contributing and Troubleshooting guide - PR #20674

- Reorganize Admin UI subsection - PR #20676

- SDK proxy authentication (OAuth2/JWT auto-refresh) - PR #20680

- Forward client headers to LLM API documentation fix - PR #20768

- Add docs guide for using policies - PR #20914

- Add native thinking param examples for Claude Opus 4.6 - PR #20799

- Fix Claude Code MCP tutorial - PR #21145

- Add API base URLs for Dashscope (International and China/Beijing) - PR #21083

- Fix DEFAULT_NUM_WORKERS_LITELLM_PROXY default (1, not 4) - PR #21127

- Correct ElevenLabs support status in README - PR #20643

New Contributors

- @iver56 made their first contribution in PR #20643

- @eliasaronson made their first contribution in PR #20666

- @NirantK made their first contribution in PR #19656

- @looksgood made their first contribution in PR #20919

- @kelvin-tran made their first contribution in PR #20548

- @bluet made their first contribution in PR #20873

- @itayov made their first contribution in PR #20729

- @CSteigstra made their first contribution in PR #20960

- @rahulrd25 made their first contribution in PR #20569

- @muraliavarma made their first contribution in PR #20598

- @joaokopernico made their first contribution in PR #21039

- @datzscaler made their first contribution in PR #21077

- @atapia27 made their first contribution in PR #20922

- @fpagny made their first contribution in PR #21121

- @aidankovacic-8451 made their first contribution in PR #21119

- @luisgallego-aily made their first contribution in PR #19935